When Machines Outsmart Humans

(c) 2000, Nick Bostrom

Futures, Vol. 35:7, pp. 759-764, with a symposium organized around my article.

SUMMARY

Artificial intelligence is a possibility that should not be ignored in any serious thinking about the future, and it raises many profound issues for ethics and public policy that philosophers ought to start thinking about. This article outlines the case for thinking that human-level machine intelligence might well appear within the next half century. It then explains four immediate consequences of such a development, and argues that machine intelligence would have a revolutionary impact on a wide range of the social, political, economic, commercial, technological, scientific and environmental issues that humanity will face over the coming decades.

The annals of artificial intelligence are littered with broken promises. Half a century after the first electronic computer, we still have nothing that even resembles an intelligent machine, if by ‘intelligent’ we mean possessing the kind of general-purpose smartness that we humans pride ourselves on. Maybe we will never manage to build real artificial intelligence. The problem could be too difficult for human brains ever to solve. Those who find the prospect of machines surpassing us in general intellectual abilities threatening may even hope that is the case.

However, neither the fact that machine intelligence would be scary nor the fact that some past predictions were wrong is a good ground for concluding that artificial intelligence will never be created. Indeed, to assume that artificial intelligence is impossible or will take thousands of years to develop seems at least as unwarranted as to make the opposite assumption. At a minimum, we must acknowledge that any scenario about what the world will be like in 2050 that simply postulates the absence of human-level artificial intelligence is making a big assumption that could well turn out to be false.

It is therefore important to consider the alternative possibility, that intelligent machines will be built within fifty years. In the past year or two, there have been several books and articles published by leading researchers in artificial intelligence and robotics that argue for precisely that projection. This essay will first outline some of the reasons for this, and then discuss some of the consequences of human-level artificial intelligence.

We can get a grasp of the issue by considering the three things that are needed for an effective artificial intelligence. These are: hardware, software, and input/output mechanisms.

The requisite input/output technology already exists. We have video cameras, speakers, robotic arms, etc., that provide a rich variety of ways for a computer to interact with its environment. So this part is trivial.

The hardware problem is more challenging. Speed rather than memory seems to be the limiting factor. We can make a guess at the computer hardware that will be needed by estimating the processing power of a human brain. We get somewhat different figures depending on what method we use and what degree of optimisation we assume, but typical estimates range from 100 million MIPS to 100 billion MIPS (1 MIPS = 1 million instructions per second). A high-range PC today has about one thousand MIPS. The most powerful supercomputer to date performs at about 10 million MIPS. This means that we will soon be within striking distance of meeting the hardware requirements for human-level artificial intelligence. In retrospect, it is easy to see why the early artificial intelligence efforts in the sixties and seventies could not possibly have succeeded: the hardware available then was pitifully inadequate. It is no wonder that human-level intelligence was not attained using less-than-cockroach-level processing power.
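The size of the remaining gap follows directly from the figures quoted above. The short sketch below simply divides each brain estimate by each machine's speed; the constant names and the helper `shortfall` are illustrative choices, not anything from the original text.

```python
# Figures quoted in the text, all in MIPS (millions of instructions per second).
BRAIN_LOW_MIPS = 100e6          # lower brain estimate: 100 million MIPS
BRAIN_HIGH_MIPS = 100e9         # upper brain estimate: 100 billion MIPS
HIGH_END_PC_MIPS = 1e3          # high-range PC at the time of writing
TOP_SUPERCOMPUTER_MIPS = 10e6   # most powerful supercomputer at the time of writing


def shortfall(machine_mips: float, required_mips: float) -> float:
    """How many times faster a machine would have to be to meet the requirement."""
    return required_mips / machine_mips


for name, mips in [("high-end PC", HIGH_END_PC_MIPS),
                   ("top supercomputer", TOP_SUPERCOMPUTER_MIPS)]:
    low = shortfall(mips, BRAIN_LOW_MIPS)
    high = shortfall(mips, BRAIN_HIGH_MIPS)
    print(f"{name}: needs {low:,.0f}x to {high:,.0f}x more speed")
```

On these numbers the best supercomputer falls short by a factor of roughly 10 to 10,000, while a desktop PC falls short by a factor of 100,000 to 100 million.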

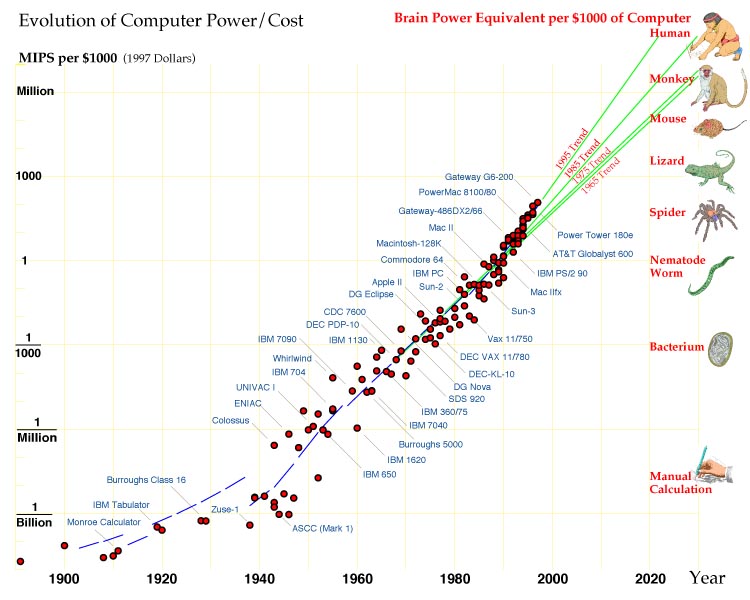

Turning our gaze forward, we can predict with a rather high degree of confidence that hardware matching that of the human brain will be available in the foreseeable future. IBM is currently working on a next-generation supercomputer, Blue Gene, which will perform over 1 billion MIPS. This computer is expected to be ready around 2005. We can extrapolate beyond this date using Moore's Law, which describes the historical growth rate of computer speed. (Strictly speaking, Moore's Law as originally formulated was about the density of transistors on a computer chip, but this has been closely correlated with processing power.) For the past half century, computing power has doubled every eighteen months to two years (see Figure 1). Moore's Law is really not a law at all, but merely an observed regularity. In principle, it could stop holding true at any point in time. Nevertheless, the trend it depicts has been going strong for a very extended period of time, and it has survived several transitions in the underlying technology (from relays to vacuum tubes, to transistors, to integrated circuits, to Very Large Scale Integration, VLSI). Chip manufacturers rely on it when they plan their forthcoming product lines. It is therefore reasonable to suppose that it may continue to hold for some time. Using a conservative doubling time of two years, Moore's Law predicts that the upper-end estimate of the human brain's processing power will be reached before 2019. Since this represents the performance of the best supercomputer in the world, one may add a few years to account for the delay that may occur before that level of computing power becomes available for doing experimental work in artificial intelligence. The exact numbers don't matter much here. The point is that human-level computing power has not been reached yet, but almost certainly will be attained well before 2050.
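The "before 2019" figure can be checked with a simple exponential extrapolation: count how many doublings separate Blue Gene's projected 1 billion MIPS from the upper brain estimate of 100 billion MIPS, and multiply by the doubling time. This is a minimal sketch assuming a perfectly steady doubling rate; the function name `year_reached` is illustrative.

```python
import math


def year_reached(start_year: float, start_mips: float, target_mips: float,
                 doubling_years: float = 2.0) -> float:
    """Year at which start_mips grows to target_mips, assuming steady doubling."""
    doublings = math.log2(target_mips / start_mips)
    return start_year + doublings * doubling_years


BLUE_GENE_YEAR = 2005
BLUE_GENE_MIPS = 1e9       # "over 1 billion MIPS"
BRAIN_HIGH_MIPS = 100e9    # upper-end brain estimate

conservative = year_reached(BLUE_GENE_YEAR, BLUE_GENE_MIPS, BRAIN_HIGH_MIPS)
optimistic = year_reached(BLUE_GENE_YEAR, BLUE_GENE_MIPS, BRAIN_HIGH_MIPS,
                          doubling_years=1.5)
print(f"two-year doubling: {conservative:.1f}")
print(f"eighteen-month doubling: {optimistic:.1f}")
```

A factor of 100 takes about 6.6 doublings, so the two-year doubling time gives roughly 2018.3 and the eighteen-month doubling time gives roughly 2015. Blue Gene's projected speed already exceeds the lower-end estimate of 100 million MIPS, so for that target the function returns a year before 2005.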

This leaves the software problem. It is harder to analyse in a rigorous way how long it will take to solve that problem. (Of course, this holds equally for those who feel confident that artificial intelligence will remain unobtainable for an extremely long time: in the absence of evidence, we should not rule out either alternative.) Here we will approach the issue by outlining two approaches to creating the software, and presenting some general plausibility arguments for why they could work.

We know that the software problem can be solved in principle. After all, humans have achieved human-level intelligence, so it is evidently possible. One way to build the requisite software is to figure out how the human brain works, and copy nature's solution. It is only relatively recently that we have begun to understand the computational mechanisms of biological brains. Computational neuroscience is only about fifteen years old as an active research discipline. In this short time, substantial progress has been made. We are beginning to understand early sensory processing. There are reasonably good computational models of primary visual cortex, and we are working our way up to the higher stages of visual cognition. We are uncovering the basic learning algorithms that govern how the strengths of synapses are modified by experience. The general architecture of our neuronal networks is being mapped out as we learn more about the interconnectivity between neurones and how different cortical areas project onto one another. While we are still far from understanding higher-level thinking, we are beginning to figure out how the individual components work and how they are hooked up.
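The article does not say which synaptic learning rules it has in mind. As one classic textbook example of an experience-driven rule, the plain Hebbian update strengthens a synapse in proportion to the product of presynaptic and postsynaptic activity. The sketch below assumes that simplified rule with an illustrative learning rate; real synaptic plasticity is considerably more complex.

```python
def hebbian_update(weights, pre, post, learning_rate=0.1):
    """One plain Hebbian step: dw_i = learning_rate * pre_i * post.

    weights: current synaptic strengths onto one postsynaptic neuron
    pre:     presynaptic activity for each synapse
    post:    activity of the postsynaptic neuron
    """
    return [w + learning_rate * x * post for w, x in zip(weights, pre)]


weights = [0.0, 0.0, 0.0]
# Inputs 0 and 2 repeatedly fire together with the postsynaptic neuron;
# input 1 stays silent, so its synapse is never strengthened.
for _ in range(5):
    weights = hebbian_update(weights, pre=[1.0, 0.0, 1.0], post=1.0)
print(weights)
```

Only the synapses whose activity coincides with the neuron's own firing grow stronger. Because this unmodified rule lets weights grow without limit, practical models usually add some form of normalisation or decay.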

Assuming continuing rapid progress in neuroscience, we can envision learning enough about the lower-level processes and the overall architecture to begin to implement the same paradigms in computer simulations. Today, such simulations are limited to relatively small assemblies of neurones. There is a silicon retina and a silicon cochlea that do the same things as their biological counterparts. Simulating a whole brain will of course require enormous computing power; but as we saw, that capacity will be available within a couple of decades.

The product of this biology-inspired method will not be an explicitly coded mature artificial intelligence. (That is what the so-called classical school of artificial intelligence unsuccessfully tried to do.) Rather, it will be a system that has the same ability as a toddler to learn from experience and to be educated. The system will need to be taught in order to attain the abilities of adult humans. But there is no reason why the computational algorithms that our biological brains use would not work equally well when implemented in silicon hardware.

Another, more "science-fiction-like" approach has been suggested by some nanotechnology researchers (e.g. Merkle[4]). Molecular nanotechnology is the anticipated future ability to manufacture a wide range of macroscopic structures (including new materials, computers, and other complex gadgetry) to atomic precision. Nanotechnology will give us unprecedented control over the structure of matter. One application that has been proposed is to use nano-machines to disassemble a frozen or vitrified human brain, registering the position of every neurone and synapse and other relevant parameters. This could be viewed as the cerebral analogue to the human genome project. With a sufficiently detailed map of a particular human brain, and an understanding of how the various types of neurones behave, one could emulate the scanned brain on a computer by running a fine-grained simulation of its neural network. This method has the advantage that it would not require any insight into higher-level human cognition. It's a purely bottom-up process.
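The emulation step amounts to taking a connectivity map and stepping every neuron forward in time. The sketch below uses the leaky integrate-and-fire model, a standard simplified neuron model chosen here purely for illustration; the article does not specify a model. The three-neuron `scanned_map` is a hypothetical stand-in for a real scan, and every parameter value is an arbitrary assumption.

```python
from dataclasses import dataclass


@dataclass
class Synapse:
    source: int
    target: int
    weight: float  # jump added to the target's potential when the source spikes


def simulate(n_neurons, synapses, external_input, steps,
             dt=1.0, tau=10.0, threshold=1.0, reset=0.0):
    """Simulate leaky integrate-and-fire neurons wired by a connectivity map.

    Returns a list of (step, neuron) spike events.
    """
    v = [reset] * n_neurons
    outgoing = {i: [] for i in range(n_neurons)}
    for s in synapses:
        outgoing[s.source].append(s)

    spikes = []
    fired = []
    for t in range(steps):
        # Synaptic input delivered by neurons that spiked on the previous step.
        syn = [0.0] * n_neurons
        for i in fired:
            for s in outgoing[i]:
                syn[s.target] += s.weight
        fired = []
        for i in range(n_neurons):
            # Leak toward the reset potential, add external drive, then synaptic jumps.
            v[i] += dt * (-(v[i] - reset) / tau + external_input[i]) + syn[i]
            if v[i] >= threshold:
                fired.append(i)
                spikes.append((t, i))
                v[i] = reset
    return spikes


# Hypothetical "scan": neuron 0 receives external drive and excites neuron 1,
# which in turn excites neuron 2.
scanned_map = [Synapse(0, 1, 1.2), Synapse(1, 2, 1.2)]
events = simulate(3, scanned_map, external_input=[0.2, 0.0, 0.0], steps=50)
print(events[:6])
```

Activity propagates along the mapped connections (neuron 0, then 1, then 2) without the program encoding any higher-level description of what the circuit does. That property is what makes the approach purely bottom-up. A real emulation would need vastly more neurones and a far more detailed model of each.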

These are two strategies for building the software for a human-level artificial intelligence that we can envision today. There may be other ways that we have not yet thought of that will get us there faster. Although it is impossible to make rigorous predictions regarding the time-scale of these developments, it seems reasonable to take seriously the possibility that all the prerequisites for intelligent machines - hardware, input/output mechanisms, and software - will be attained within fifty years.

In thinking about the world in the mid-21st century, we should therefore consider the ramifications of human-level artificial intelligence. Four immediate implications are:

An artificial intelligence is based on software, and it can therefore be copied as easily as any other computer program. Apart from hardware requirements, the marginal cost of creating an additional artificial intelligence after you have built the first one is close to zero. Artificial minds could therefore quickly come to exist in great numbers, amplifying the impact of the initial breakthrough.

There is a temptation to stop the analysis at the point where human-level machine intelligence appears, since that by itself is quite a dramatic development. But doing so is to miss an essential point that makes artificial intelligence a truly revolutionary prospect, namely, that it can be expected to lead to the creation of machines with intellectual abilities that vastly surpass those of any human. We can predict with great confidence that this second step will follow, although the time-scale is again somewhat uncertain. If Moore's Law continues to hold in this era, the speed of artificial intelligences will double at least every two years. Within fourteen years after human-level artificial intelligence is reached, there could be machines that think more than a hundred times faster than humans do. In reality, progress could be even more rapid than that, because there would likely be parallel improvements in the efficiency of the software that these machines use. The interval during which the machines and humans are roughly matched will likely be brief. Shortly thereafter, humans will be unable to compete intellectually with artificial minds.
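The hundredfold figure is just repeated doubling: fourteen years at one doubling every two years is seven doublings. This minimal sketch assumes a constant doubling time and ignores the software improvements the paragraph mentions; the function name `speedup` is illustrative.

```python
import math


def speedup(years: float, doubling_years: float = 2.0) -> float:
    """Speed multiplier after `years` of steady doubling."""
    return 2 ** (years / doubling_years)


def years_to_reach(factor: float, doubling_years: float = 2.0) -> float:
    """Years of steady doubling needed to reach a given speed multiplier."""
    return math.log2(factor) * doubling_years


print(speedup(14))          # seven doublings
print(years_to_reach(100))  # time to a hundredfold speedup
```

Seven doublings give a factor of 2^7 = 128. A hundredfold speedup is reached after about 13.3 years, comfortably inside the fourteen-year window.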

Artificial intelligence is a true general-purpose technology. It enables applications in a very wide range of other fields. In particular, scientific and technological research (as well as philosophical thinking) will be done more effectively when conducted by machines that are cleverer than humans. One can therefore expect that overall technological progress will be rapid.

Machine intelligences may devote their abilities to designing the next generation of machine intelligence. This next generation will be even smarter and might be able to design its successors in even shorter time. Some authors have speculated that this positive feedback loop will lead to a "singularity", a point where technological progress becomes so rapid that genuine superintelligence, with abilities unfathomable to mere humans, is attained within a short time span (Vinge[5]). However, it may turn out that there are diminishing returns in artificial intelligence research when some point is reached. Maybe once the low-hanging fruit has been picked, it gets harder and harder to make further improvements. There seems to be no clear way of predicting which way it will go.
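The contrast between the two scenarios can be made concrete with a deliberately crude toy model. Suppose each generation's design time is inversely proportional to its intelligence (humans = 1.0), and each new generation multiplies intelligence by some gain. The model, its parameters, and both gain schedules below are illustrative assumptions, not predictions. The point is only that the outcome hinges on how the gains behave, which is exactly what we cannot currently predict.

```python
def run(generations, gain_for, first_design_years=2.0):
    """Toy model of machines designing their successors.

    Generation n has intelligence I_n (humans = 1.0). Designing the next
    generation takes first_design_years / I_n years and multiplies
    intelligence by (1 + gain_for(n)). Returns (final intelligence,
    total elapsed years).
    """
    intelligence = 1.0
    elapsed = 0.0
    for n in range(generations):
        elapsed += first_design_years / intelligence
        intelligence *= 1 + gain_for(n)
    return intelligence, elapsed


# Feedback loop: every generation is 50% smarter than the last.
constant = run(30, lambda n: 0.5)
# Diminishing returns: each generation's gain shrinks rapidly.
diminishing = run(30, lambda n: 0.5 / (n + 1) ** 2)

print(f"constant gains:    intelligence {constant[0]:,.0f}, years {constant[1]:.1f}")
print(f"diminishing gains: intelligence {diminishing[0]:.2f}, years {diminishing[1]:.1f}")
```

With constant gains, design times shrink geometrically, so thirty generations fit into less than six years while intelligence grows by a factor of over 100,000: a toy version of the "singularity". With rapidly diminishing gains, intelligence levels off at roughly twice the starting level and each generation keeps taking about the same time.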

It would be a mistake to conceptualise machine intelligence as a mere tool. Although it may be possible to build special-purpose artificial intelligence that could only think about some restricted set of problems, we are considering here a scenario in which machines with general-purpose intelligence are created. Such machines would be capable of independent initiative and of making their own plans. Such artificial intellects are perhaps more appropriately viewed as persons than machines. In economics lingo, they might come to be classified not as capital but as labour. If we can control the motivations of the artificial intellects that we design, they could come to constitute a class of highly capable "slaves" (although that term might be misleading if the machines don't want to do anything other than serve the people who built or commissioned them). The ethical and political debates surrounding these issues will likely become intense as the prospect of artificial intelligence draws closer.

Two overarching conclusions can be drawn. The first is that there is currently no warrant for dismissing the possibility that machines with greater-than-human intelligence will be built within fifty years. On the contrary, we should recognise this as a possibility that merits serious attention. The second conclusion is that the creation of such artificial intellects will have wide-ranging consequences for almost all the social, political, economic, commercial, technological, scientific and environmental issues that humanity will confront in this century.

Acknowledgments

I'd like to thank all those who have commented on earlier versions of this paper. The very helpful suggestions by Hal Finney, Robin Hanson, Carl Feynman, Anders Sandberg, and Peter McCluskey were especially appreciated.


Figure 1: The exponential growth in computing power. (From Hans Moravec, "When will computer hardware match the human brain?", Journal of Transhumanism, Vol. 1, 1998; courtesy of the World Transhumanist Association.)